Input/Output Tracking in Machine Learning Inference: A Complete Guide with Inferless

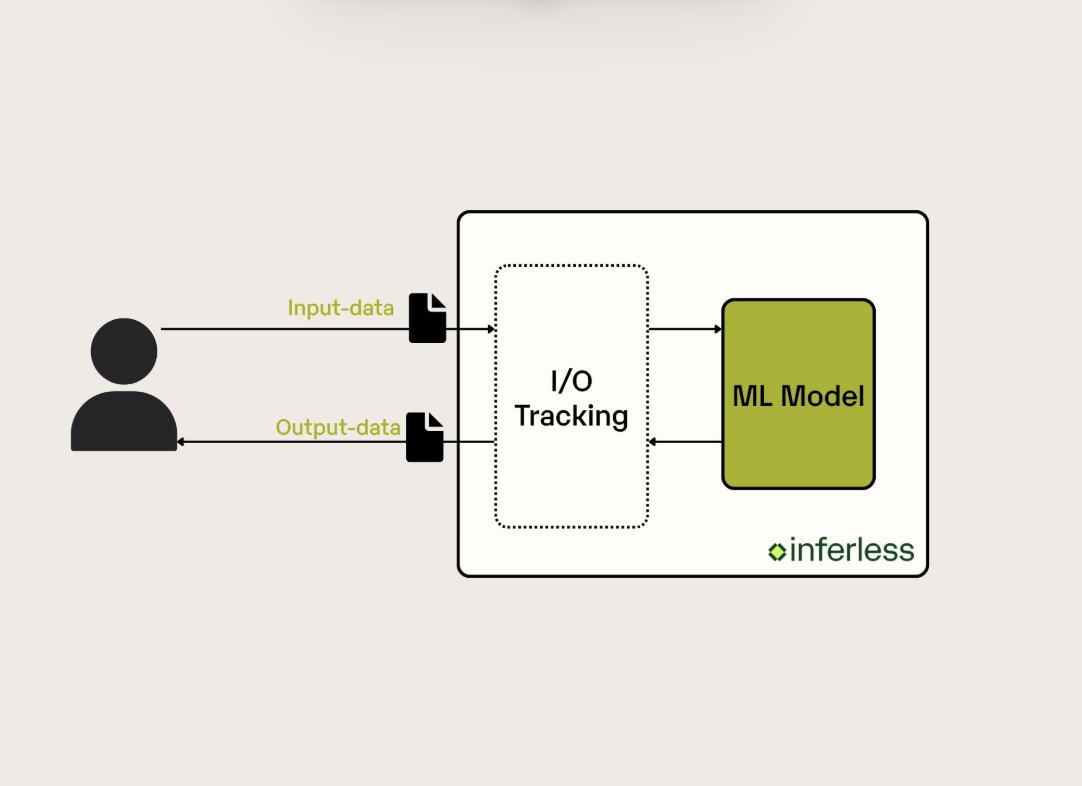

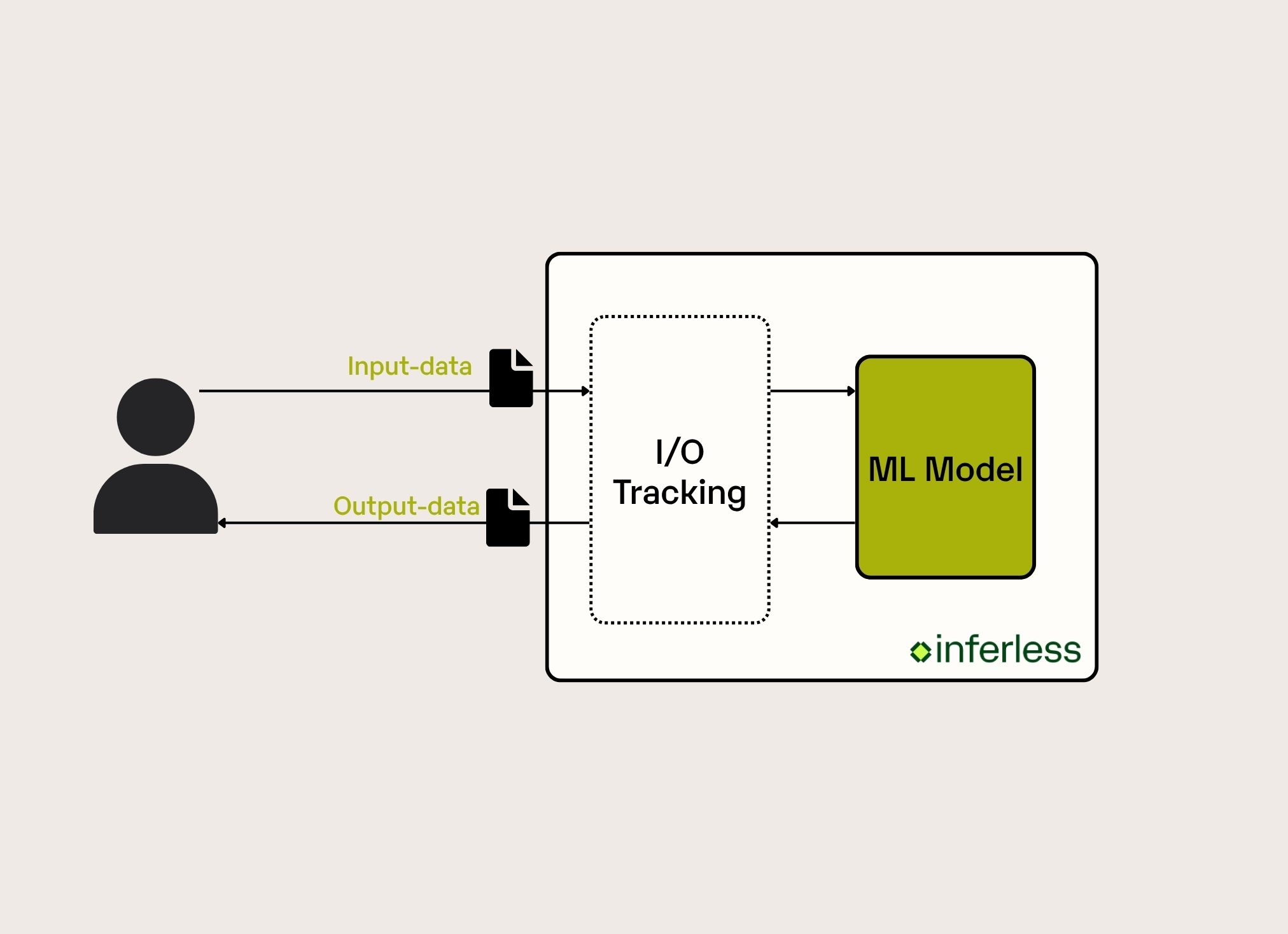

In machine learning, understanding what goes into your models and what comes out is crucial for maintaining reliable, high-performing inference systems. Today, we'll discuss Inferless's Input/Output Tracking feature, a capability that brings visibility into your ML inference pipeline.

Input/output tracking in machine learning inference refers to the systematic monitoring and logging of the data that flows through your ML models at prediction time. It has become essential for teams that want to debug faster, monitor more effectively, tune performance, and control costs in production.

What is Input/Output Tracking?

Input/output tracking records the inputs sent to your models and the outputs they generate, along with associated metadata such as timestamps and latency metrics.

Whether you're running computer vision models that process thousands of images per hour or natural language processing models that handle complex text inputs, I/O tracking gives you visibility into your model's behavior in production.

Why We Created Input/Output Tracking

Without proper I/O tracking, ML teams face several critical challenges:

- Debugging: When a model returns unexpected or erroneous results, pinpointing the root cause is difficult. Teams waste valuable hours trying to reproduce problems without clear data on which inputs triggered them.

- Performance: Understanding why certain requests take longer to process or consume more resources becomes guesswork. Without detailed input-output data, optimizing performance is difficult and inefficient.

- Cost: Teams spend significant amounts on inference but can't tell which requests are necessary and which are wasteful. They lack the granular data needed to optimize their spending.

Benefits of Input/Output Tracking in Inferless

Effective input/output tracking transforms these challenges into opportunities:

- Rapid Issue Resolution: Problematic inputs can be identified and reproduced quickly, dramatically reducing debugging time

- Data-Driven Performance Optimization: Clear correlations between input characteristics and system performance enable targeted improvements

- Enhanced Model Monitoring: Continuous tracking helps detect model drift, performance degradation, and anomalous behavior

- Improved Cost Management: Detailed usage patterns help optimize resource allocation and identify cost-saving opportunities

- Better Compliance: Comprehensive audit trails support regulatory requirements and security protocols

How Inferless Implements Input/Output Tracking

Inferless's I/O tracking is designed with simplicity and transparency at its core: it captures detailed information about every inference request and presents it through an easy-to-use interface.

Once tracking is enabled, Inferless automatically logs every inference request, with detailed metadata, in a structured table. Here's what gets tracked for each request:

- Serial Number: Each request receives a unique sequence number for easy reference and tracking

- Execution Time: Processing duration measured down to milliseconds (e.g., 19.31 sec, 19.388 sec)

- Timestamps: Complete timestamp information with timezone support (e.g., 07/07/25 15:54 GMT+05:30)

- Request Status: Clear status indicators such as Completed, Failed, or Inferencing.

- Input/Output Data: Instant access to detailed input and output data for each request, enabling thorough analysis and debugging.
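To make the fields above concrete, here is a minimal sketch of how one tracked row might be modeled if you copied it out of the dashboard for offline analysis. The `TrackedRequest` class and both parsing helpers are hypothetical and not part of any Inferless API. The timestamp parser assumes day/month/year order, since the example 07/07/25 does not disambiguate it, and treating the output as empty while a request is still inferencing is likewise an assumption.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Any, Dict, Optional


@dataclass
class TrackedRequest:
    """Hypothetical local model of one row in the I/O tracking table."""
    serial_number: int
    execution_time_s: float
    timestamp: datetime
    status: str  # e.g. "Completed", "Failed", or "Inferencing"
    input_data: Dict[str, Any]
    output_data: Optional[Dict[str, Any]]  # assumption: None until the request completes


def parse_execution_time(text: str) -> float:
    """Convert a display string such as '19.388 sec' into seconds."""
    value, unit = text.split()
    if unit != "sec":
        raise ValueError(f"unexpected unit: {unit!r}")
    return float(value)


def parse_timestamp(text: str) -> datetime:
    """Parse a string such as '07/07/25 15:54 GMT+05:30' into an aware datetime.

    Assumes day/month/year order. Stripping 'GMT' leaves '+05:30' for %z.
    """
    return datetime.strptime(text.replace("GMT", ""), "%d/%m/%y %H:%M %z")


row = TrackedRequest(
    serial_number=1,
    execution_time_s=parse_execution_time("19.31 sec"),
    timestamp=parse_timestamp("07/07/25 15:54 GMT+05:30"),
    status="Completed",
    input_data={"prompt": "example input"},
    output_data={"text": "example output"},
)
print(row.execution_time_s, row.timestamp.isoformat())  # 19.31 2025-07-07T15:54:00+05:30
```

Keeping the timestamp timezone-aware means rows captured under different UTC offsets can still be sorted and compared correctly.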

Real-World Use Cases & Examples

To better illustrate the practical value of Input/Output Tracking, let's examine two real-world scenarios where this feature makes a significant difference:

E-commerce Product Recommendation System

An online retailer deployed a recommendation model that suddenly showed a 15% drop in click-through rate. The team knew something was wrong but couldn't pinpoint the issue.

By examining the tracking data, the team could quickly identify that recommendations were failing for products with newly introduced category tags. The tracking logs showed that inputs containing these new tags consistently produced generic recommendations instead of personalized ones.
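The comparison the team ran can be sketched in a few lines, assuming the tracked rows have been exported as dictionaries. The field names (`category_tags`, `strategy`), the tag value, and the sample rows are made up for illustration; they are not Inferless's schema or the retailer's data.

```python
from typing import Any, Dict, Iterable, List, Set, Tuple

Record = Dict[str, Any]  # hypothetical shape: {"input": {...}, "output": {...}}


def split_by_new_tags(
    records: Iterable[Record], new_tags: Set[str]
) -> Tuple[List[Record], List[Record]]:
    """Separate requests whose input contains any newly introduced tag."""
    with_new: List[Record] = []
    without_new: List[Record] = []
    for r in records:
        tags = set(r["input"].get("category_tags", []))
        (with_new if tags & new_tags else without_new).append(r)
    return with_new, without_new


def generic_rate(records: List[Record]) -> float:
    """Fraction of requests that fell back to generic recommendations."""
    if not records:
        return 0.0
    generic = sum(1 for r in records if r["output"]["strategy"] == "generic")
    return generic / len(records)


# Made-up sample rows for illustration only.
sample = [
    {"input": {"category_tags": ["shoes"]}, "output": {"strategy": "personalized"}},
    {"input": {"category_tags": ["eco-friendly"]}, "output": {"strategy": "generic"}},
    {"input": {"category_tags": ["shoes", "eco-friendly"]}, "output": {"strategy": "generic"}},
    {"input": {"category_tags": ["bags"]}, "output": {"strategy": "personalized"}},
]
with_new, without_new = split_by_new_tags(sample, {"eco-friendly"})
print(generic_rate(with_new), generic_rate(without_new))  # 1.0 0.0
```

A large gap between the two rates is the signal described in the scenario: requests carrying the new tags fall back to generic output far more often than the rest.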

Content Moderation for Social Media Platform

A social media platform's content moderation model was inconsistently flagging posts, leading to user complaints about both over-moderation and under-moderation. The content team needed to understand why similar posts were receiving different moderation decisions.

Inferless’s I/O tracking feature allowed the team to analyze moderation decisions, examining the relationship between post characteristics (text length, language, embedded media) and moderation outcomes. They could identify patterns in both successful and problematic moderation decisions.
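A minimal sketch of that analysis, assuming the moderation requests have been exported as dictionaries, groups requests by a post characteristic and reports the share flagged in each group. The field names (`text`, `language`, `flagged`), the 50-character cutoff, and the sample rows are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, Iterable, List

Record = Dict[str, Any]  # hypothetical shape: {"input": {...}, "output": {...}}


def flag_rate_by(records: Iterable[Record], key: Callable[[Record], Any]) -> Dict[Any, float]:
    """Share of flagged posts within each group returned by `key`."""
    counts: Dict[Any, List[int]] = defaultdict(lambda: [0, 0])  # group -> [flagged, total]
    for r in records:
        group = counts[key(r)]
        group[1] += 1
        if r["output"]["flagged"]:
            group[0] += 1
    return {k: flagged / total for k, (flagged, total) in counts.items()}


def length_bucket(r: Record) -> str:
    """Coarse text-length bucket; the 50-character cutoff is arbitrary."""
    return "short" if len(r["input"]["text"]) < 50 else "long"


# Made-up sample rows for illustration only.
sample = [
    {"input": {"text": "hi there", "language": "en"}, "output": {"flagged": False}},
    {"input": {"text": "hola", "language": "es"}, "output": {"flagged": True}},
    {"input": {"text": "buenos días", "language": "es"}, "output": {"flagged": True}},
    {"input": {"text": "hello", "language": "en"}, "output": {"flagged": False}},
]
print(flag_rate_by(sample, lambda r: r["input"]["language"]))  # {'en': 0.0, 'es': 1.0}
print(flag_rate_by(sample, length_bucket))  # {'short': 0.5}
```

Passing a different `key` (for example, whether a post has embedded media) reuses the same grouping logic for each characteristic the team wants to compare.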

Input/Output Tracking represents a significant step forward in ML inference observability and debugging capabilities. By providing comprehensive visibility into your model's behavior, this feature enables faster troubleshooting, better performance optimization, and more confident deployments.

How to Enable and Use Input/Output Tracking in Inferless

Getting started with Input/Output Tracking in Inferless is straightforward and requires no code changes to your existing models. Here's how you can enable, access, and utilize this feature:

Step 1: Enabling Input/Output Tracking During Model Deployment

During the Model Deployment Process:

- Navigate to your Inferless dashboard and begin the model deployment process

- Complete all the standard deployment configurations (model selection, compute resources, environment settings)

- At the end of the deployment flow, look for the "Input and Output Tracking" option

- Enable the toggle for "Input and Output Tracking" to activate comprehensive I/O monitoring for your model

- Complete the deployment process. Your model will now automatically capture detailed tracking data for every inference request

Step 2: Accessing Your Tracked Data

Viewing Input/Output Tracking Data:

- Navigate to your deployed model by clicking on it from your My Models page

- Click on the "API" tab to access the model's API management interface

- Scroll to the bottom of the API tab, where you'll find the "Input/Output Tracking" tab

Conclusion

Inferless’s new Input/Output Tracking turns every inference request into an actionable insight, giving ML teams the clarity they need to debug faster, optimize performance, control costs, and meet compliance requirements, all without adding a single line of code.

By enabling I/O Tracking during deployment, you gain a transparent window into your model’s real-world behavior and the confidence to iterate quickly and scale responsibly.
